Enhance AI experiences with multi-modal multi-agentic systems

In a world where time is scarce and interruptions are constant, the way we work is ripe for transformation. According to Microsoft’s latest Work Trend Index, 80% of professionals globally say they lack the time or energy to do their jobs effectively. With an average of 275 interruptions per day – including 58 chats after hours and a 16% annual increase in late-night meetings – it’s no wonder productivity is under pressure.

Yet, while the pace of business and technology accelerates, our workflows often lag behind. Even with AI tools in place, 53% of global leaders believe their organizations need a productivity boost. So, how do we bridge this gap?

The answer lies in intelligent delegation – aka AI agents.

How Are AI Agents Driving Real-World Impact Across Industries?

This shift doesn’t happen overnight. It’s a gradual evolution, and those who embrace it will thrive. The Work Trend Index outlines a three-phase journey toward becoming a “Frontier Firm”:

- Phase 1: AI acts as an assistant, removing the monotony of work and helping people do the same tasks better and faster. This is the phase most of us are in today, using AI models to write documentation and emails, summarize meetings, and develop code.

- Phase 2: Agents join teams as digital coworkers, taking on specific tasks under human direction. These agents equip employees with new capabilities that help scale their impact, freeing them up to take on new and more valuable work.

- Phase 3: Humans set the direction for agents that manage entire business processes and workflows, stepping in only when needed – for example, to handle exceptions or manage human relationships.

This journey isn’t strictly linear: in many cases, organizations will find themselves operating in all three phases at once. New roles will also emerge, such as agent managers, who will need new skills to build and manage agents.

This transformation spans many use cases and industries. Some examples where intelligent agents are already making a difference are:

- Healthcare: Accelerating drug discovery and clinical trials through data analysis and simulation.

- Energy & Manufacturing: Enabling predictive maintenance and automating supplier coordination.

- Travel & Hospitality: Personalizing travel experiences and automating bookings.

- Retail: Enhancing product discovery and optimizing inventory management.

Single-agent and multi-agent systems

Breaking down its architecture, an agent includes three main components:

- A large language model (or, in some more complex architectures, several).

- A set of instructions to ‘teach’ the model how to behave and how to use the tools at its disposal.

- A set of tools that enable the model to retrieve data to ground its responses, invoke third-party services, and perform actions on behalf of the user.

An agent also maintains a memory, usually in the form of a conversation thread.
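To make these components concrete, here is a minimal, framework-free sketch of an agent loop: a model, instructions, a tool registry, and a conversation thread used as memory. It assumes a hypothetical `fake_model` function in place of a real LLM call, and the `Agent` class, tool name, and menu are illustrative only; this is not the API of any Azure or Semantic Kernel library.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical model signature: (instructions, thread, tool names) -> decision.
# A decision is either {"tool": name, "args": {...}} or {"answer": text}.
ModelFn = Callable[[str, List[dict], List[str]], dict]


@dataclass
class Agent:
    name: str
    instructions: str                 # "teaches" the model how to behave
    model: ModelFn                    # the LLM (stubbed here)
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    thread: List[dict] = field(default_factory=list)  # memory: the conversation thread

    def run(self, user_message: str, max_steps: int = 5) -> str:
        self.thread.append({"role": "user", "content": user_message})
        for _ in range(max_steps):
            decision = self.model(self.instructions, self.thread, list(self.tools))
            if "tool" in decision:
                # The model requested a tool: run it and record the result in the thread
                result = self.tools[decision["tool"]](**decision.get("args", {}))
                self.thread.append({"role": "tool", "name": decision["tool"], "content": result})
            else:
                self.thread.append({"role": "assistant", "content": decision["answer"]})
                return decision["answer"]
        raise RuntimeError("agent did not produce an answer within max_steps")


def fake_model(instructions: str, thread: List[dict], tool_names: List[str]) -> dict:
    # Scripted stand-in for an LLM: fetch the menu once, then answer with it.
    last = thread[-1]
    if last["role"] == "user" and "menu" in last["content"].lower() and "get_menu" in tool_names:
        return {"tool": "get_menu"}
    if last["role"] == "tool":
        return {"answer": f"Here is our menu: {last['content']}"}
    return {"answer": "Welcome to the pizzeria! How can I help?"}


agent = Agent(
    name="OrdersManagerAgent",
    instructions="Give customers a warm welcome and help them with their orders.",
    model=fake_model,
    tools={"get_menu": lambda: "Margherita, Diavola, Marinara"},
)
print(agent.run("What's on the menu?"))
```

In a real agent, the model decides which tool to call from the instructions and the tool descriptions; the loop structure of decide, act, record, and repeat stays the same.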

Agents might require input from humans to complete a task, but they can also cooperate with each other in multi-agent systems, which provide:

- Flexibility – agents can be reused across different workflows or composed into new systems; workflows are easily extended as business requirements evolve and new agents are added.

- Scalability – tasks can be distributed among multiple agents: more agents can handle more tasks simultaneously without bottlenecks.

- Specialization – agents can be fine-tuned for modular tasks or domains, improving overall performance and making the system easier to build, test and maintain.

- Performance – multi-agent systems can outperform single agents on complex tasks, adding fault tolerance and a built-in mechanism for peer review.
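The fault-tolerance and peer-review benefits can be illustrated with a small sketch. It assumes hypothetical drafter and reviewer agents modeled as plain Python functions (in practice each would wrap an LLM call): a reviewer critiques each draft until it approves, and if a drafter fails, a backup drafter takes over.

```python
from typing import Callable, List, Optional

# Hypothetical agent signatures; real agents would wrap LLM calls.
Drafter = Callable[[str, Optional[str]], str]  # (task, reviewer feedback) -> draft
Reviewer = Callable[[str], Optional[str]]      # draft -> feedback, or None to approve


def reviewed_answer(task: str, drafters: List[Drafter], reviewer: Reviewer, max_rounds: int = 3) -> str:
    """Peer review plus simple fault tolerance.

    Each drafter gets up to max_rounds to satisfy the reviewer. If a drafter raises
    an error or is never approved, the next drafter takes over.
    """
    for drafter in drafters:
        feedback: Optional[str] = None
        try:
            for _ in range(max_rounds):
                draft = drafter(task, feedback)
                feedback = reviewer(draft)
                if feedback is None:  # approved
                    return draft
        except Exception:
            continue  # this drafter failed; fall back to the next one
    raise RuntimeError("no drafter produced an approved answer")


def unavailable_drafter(task: str, feedback: Optional[str]) -> str:
    raise TimeoutError("model endpoint unavailable")  # simulated failure


def order_drafter(task: str, feedback: Optional[str]) -> str:
    draft = f"Order summary: {task}"
    # Toy revision step: add allergen info once the reviewer asks for it
    return f"{draft} (allergens: gluten, dairy)" if feedback else draft


def allergen_reviewer(draft: str) -> Optional[str]:
    return None if "allergens" in draft else "Please list the allergens."


result = reviewed_answer("1x Margherita", [unavailable_drafter, order_drafter], allergen_reviewer)
print(result)
```

The first drafter fails, the second one produces a draft, and the reviewer sends it back once before approving the revised version.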

How Can You Build Custom AI Agents with Azure?

In the Microsoft ecosystem, there are several approaches you can take to build agents, depending on your needs:

- IaaS: develop your agent with maximum control and customization using the Microsoft Agent Framework (the converged runtime of Semantic Kernel and AutoGen) or another framework of your choice, then deploy it to the cloud and monitor it over time.

- PaaS: build your agent with the Azure AI Foundry Agent Service (via a UI or an SDK), which balances flexibility with ease of use and provides managed orchestration, secure threading, and integration with several tools such as OpenAPI, Microsoft Fabric, and Logic Apps.

- SaaS: get started with building agents in minutes with low-code/no-code tools and built-in features using Microsoft Copilot Studio.

In this article, we dive deep into the first two approaches.

1. Start with a multi-modal single agent

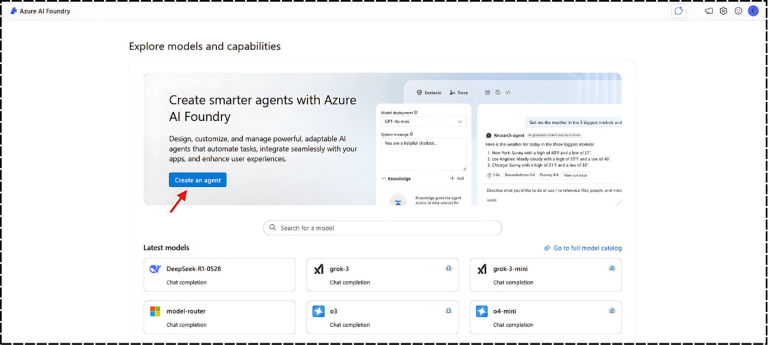

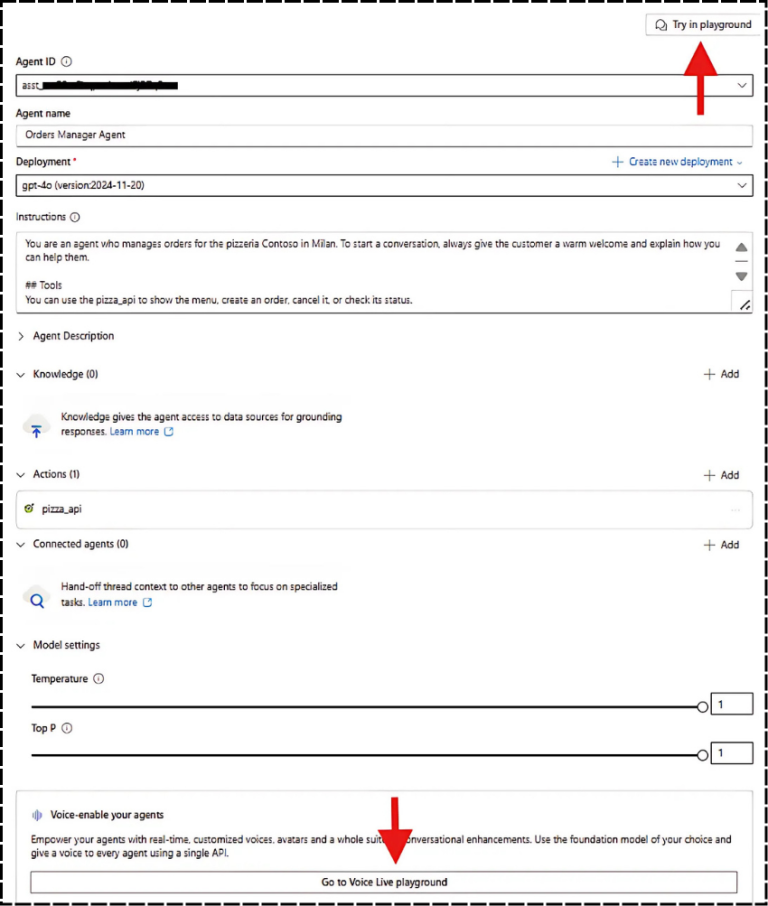

Imagine you’ve been asked to improve a pizzeria’s customer care service by automating order management with AI. To get started quickly and prototype your AI agent, navigate to the Azure AI Foundry portal and click the ‘Create an agent’ button on the landing page. This opens an agent configuration panel where you can customize the agent’s name, description, instructions, and tools.

Here’s an example:

- Name: Orders Manager Agent

- Description: An agent to assist with restaurant orders management

- Instructions: Provide detailed instructions on how the agent should interact with customers (e.g. “Give customers a warm welcome”) and handle orders (e.g. “Use this API to create a new order”).

- Tools: You can add tools such as a custom API schema via the OpenAPI connector to handle specific tasks like retrieving the menu, creating orders, and checking order status.
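As an illustration of the OpenAPI tool input, the sketch below builds a minimal OpenAPI 3.0 document for a hypothetical pizzeria orders API and prints it as JSON. The base URL, paths, field names, and `operationId` values are assumptions made up for this example, and authentication settings (which the real tool also needs) are left out. Each operation gets an `operationId` and a `description`, which give the model a name and a purpose for every action it can call.

```python
import json


def build_orders_openapi_spec(base_url: str) -> dict:
    """Minimal OpenAPI 3.0 document for a hypothetical pizzeria orders API."""
    order_request = {
        "type": "object",
        "properties": {
            "items": {"type": "array", "items": {"type": "string"}},
            "customerName": {"type": "string"},
        },
        "required": ["items"],
    }
    return {
        "openapi": "3.0.1",
        "info": {"title": "Pizzeria Orders API", "version": "1.0.0"},
        "servers": [{"url": base_url}],
        "paths": {
            "/menu": {
                "get": {
                    "operationId": "getMenu",
                    "description": "Returns the list of menu items.",
                    "responses": {"200": {"description": "Menu items"}},
                }
            },
            "/orders": {
                "post": {
                    "operationId": "createOrder",
                    "description": "Creates a new order.",
                    "requestBody": {
                        "required": True,
                        "content": {"application/json": {"schema": order_request}},
                    },
                    "responses": {"201": {"description": "Order created"}},
                }
            },
            "/orders/{orderId}": {
                "get": {
                    "operationId": "getOrderStatus",
                    "description": "Returns the status of an existing order.",
                    "parameters": [
                        {"name": "orderId", "in": "path", "required": True, "schema": {"type": "string"}}
                    ],
                    "responses": {"200": {"description": "Order status"}},
                }
            },
        },
    }


spec = build_orders_openapi_spec("https://pizzeria.example.com/api")  # hypothetical URL
operation_ids = sorted(op["operationId"] for ops in spec["paths"].values() for op in ops.values())
print(operation_ids)
print(json.dumps(spec, indent=2))  # the JSON schema you would supply to the OpenAPI tool
```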

Note that this guided process automatically creates an Azure AI Foundry project (your workspace for building and managing your AI solution) and deploys a gpt-4o model, a multimodal model from OpenAI, for your agent. If you want to experiment with different models, you can deploy the model of your choice within the project and then switch to the new deployment from the agent builder panel.

And that’s it: you’ve got your Orders Manager Agent prototype! To test it via chat, click the ‘Try in playground’ button at the top of the configuration panel. For a more immersive experience, scroll down and click ‘Go to Voice Live playground’, where you can have a natural-sounding voice conversation with the agent you just built.

2. Connect with other agents

The agent you built can describe the menu items, create orders, and report order status. However, if the user asks about the services the pizzeria offers (e.g. dine-in or home delivery) or its opening hours, the agent may give ungrounded responses, because it doesn’t have this information.

To mitigate this issue, you could add this knowledge directly to your single agent through a data connector. In some cases, however, this overloads the agent with too many skills and ultimately degrades its performance. An alternative is to build focused, reusable agents that collaborate, which improves both performance and maintainability.

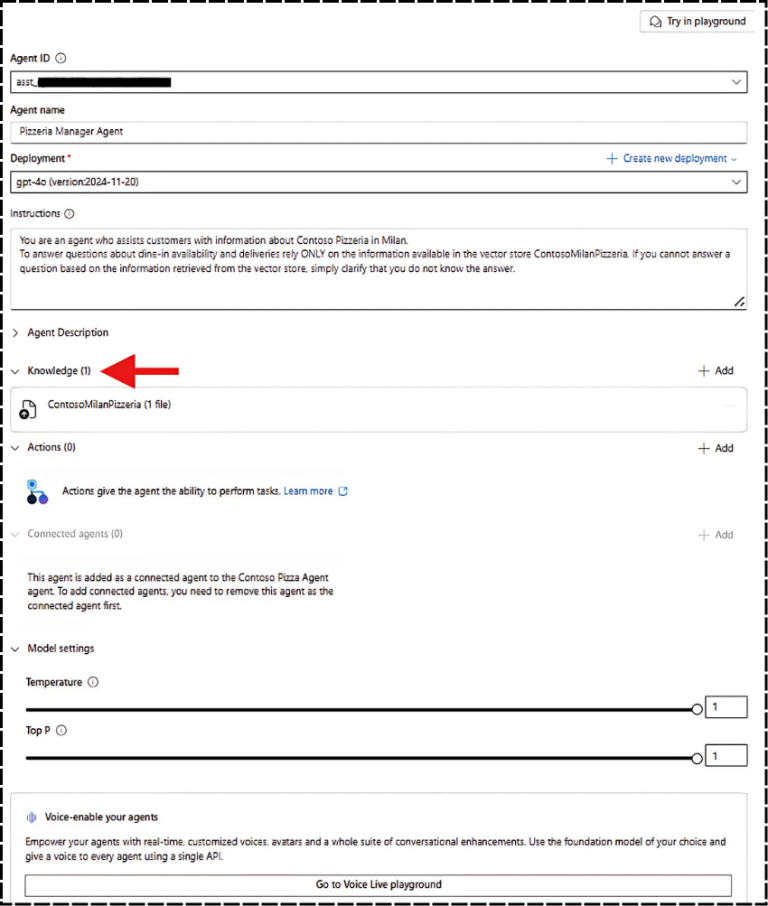

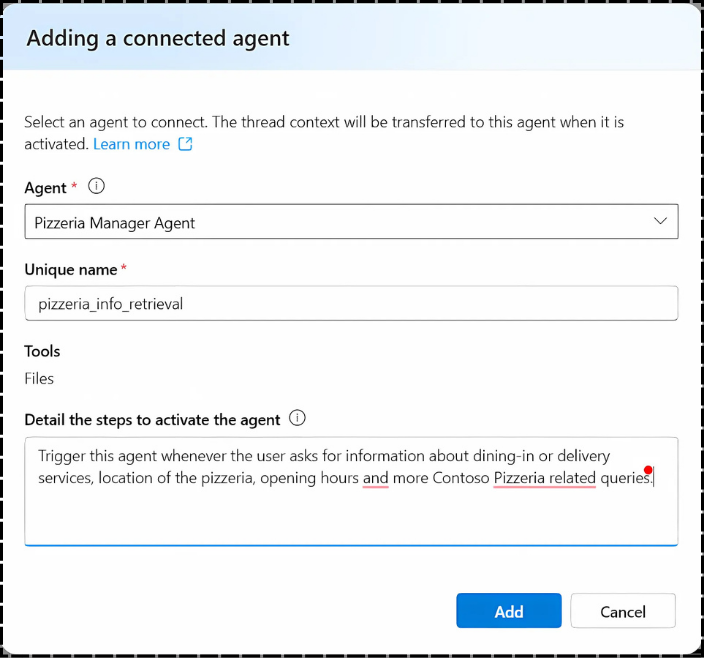

In our example scenario, you can create a new Pizzeria Manager Agent that specializes in answering questions about the pizzeria’s services and opening hours. This new agent is grounded in business data, for example by uploading local files with the File Search tool; the files are then indexed in a vector store for optimized retrieval.
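To show the idea behind vector-store retrieval, here is a deliberately simplified sketch: documents are split into chunks, each chunk is turned into a vector, and a query returns the most similar chunk. It assumes a toy bag-of-words "embedding" in place of a real embedding model, and the `ToyVectorStore` class and business facts are hypothetical; File Search does not work this way internally. The sketch only illustrates the chunk, embed, and rank flow.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": term frequencies of lowercase words (a real system uses an embedding model)
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self, chunk_size: int = 20):
        self.chunk_size = chunk_size  # words per chunk
        self.entries: List[Tuple[str, Counter]] = []

    def add_document(self, text: str) -> None:
        words = text.split()
        for i in range(0, len(words), self.chunk_size):
            chunk = " ".join(words[i:i + self.chunk_size])
            self.entries.append((chunk, embed(chunk)))

    def search(self, query: str, top_k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(q, entry[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


# Hypothetical business data for the Pizzeria Manager Agent
store = ToyVectorStore()
store.add_document("Opening hours: we are open Tuesday to Sunday from 12:00 to 23:00. We are closed on Mondays.")
store.add_document("Services: we offer dine-in seating for 40 guests and home delivery within 3 km of the Central Station.")

print(store.search("Do you offer home delivery?"))
print(store.search("When are you open on Sunday?"))
```

The agent would then place the retrieved chunk in its context so its answer is grounded in the uploaded data rather than invented.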

Once your second agent is ready, you can connect it to your Orders Manager Agent from the ‘Connected Agents’ section of the agent builder, using natural-language instructions to specify when the specialized agent should be triggered.

Now, whenever the user asks about dine-in services, home delivery, or opening hours, the system delegates the question to the connected sub-agent, while the main agent handles all other queries. The two agents share the same conversation thread, so they have access to the same context and history.
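The delegation flow can be sketched in plain Python. In Azure AI Foundry the main agent’s LLM reads each connected agent’s natural-language trigger and decides whether to call it; in this hypothetical sketch, a keyword match stands in for that decision, and the `SubAgent`/`MainAgent` classes and canned replies are illustrative assumptions. Both agents read and write the same thread.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Responder = Callable[[List[dict]], str]  # reads the shared thread, returns a reply


@dataclass
class SubAgent:
    name: str
    when_to_call: str          # natural-language trigger, as entered under 'Connected Agents'
    keywords: Tuple[str, ...]  # toy stand-in for the LLM interpreting when_to_call
    respond: Responder


@dataclass
class MainAgent:
    name: str
    respond: Responder
    connected: List[SubAgent] = field(default_factory=list)
    thread: List[dict] = field(default_factory=list)  # one thread shared by all agents

    def pick_sub_agent(self, message: str) -> Optional[SubAgent]:
        # In the hosted service the LLM makes this choice; keyword matching is only a placeholder.
        text = message.lower()
        for sub in self.connected:
            if any(keyword in text for keyword in sub.keywords):
                return sub
        return None

    def ask(self, message: str) -> str:
        self.thread.append({"role": "user", "content": message})
        sub = self.pick_sub_agent(message)
        name, respond = (sub.name, sub.respond) if sub else (self.name, self.respond)
        reply = respond(self.thread)  # whichever agent answers sees the same history
        self.thread.append({"role": "assistant", "name": name, "content": reply})
        return reply


pizzeria_manager = SubAgent(
    name="PizzeriaManagerAgent",
    when_to_call="Questions about dine-in, home delivery, or opening hours.",
    keywords=("dine-in", "delivery", "open", "hours"),
    respond=lambda thread: "We are open Tuesday to Sunday, 12:00 to 23:00.",
)
orders_manager = MainAgent(
    name="OrdersManagerAgent",
    respond=lambda thread: "Sure, which pizza would you like to order?",
    connected=[pizzeria_manager],
)

print(orders_manager.ask("What are your opening hours?"))
print(orders_manager.ask("I'd like to place an order."))
```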

3. Orchestrate multiple agents together

Connected Agents give developers the simplest way to break down complex tasks into coordinated, specialized roles without hand-coded routing logic, because sub-agents are managed as tools. The orchestration is LLM-driven: you don’t control the order in which these agents are invoked, how many times they’re invoked, or what parameters are passed to them; the LLM decides all of that.

However, in some cases you want a custom orchestrator for more control over workflow execution. For example, you might want your agents to execute sequentially, so that the output of one agent becomes the input of the next, or you might want a group-chat-style conversation among multiple agents, coordinated by a group manager, to implement reviewer patterns (one agent produces work, another critiques it). To achieve this, you can rely on the Semantic Kernel Agent Framework, which offers several built-in agent orchestration patterns and lets you create custom ones.
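The two patterns described above can be sketched without any framework to show what each one controls. This is a hypothetical illustration, not Semantic Kernel’s API (its built-in orchestrations handle agents, runtimes, and messages for you): agents are plain functions, the sequential runner pipes each output into the next agent, and the group chat lets a manager pick the next speaker until a reviewer approves.

```python
from typing import Callable, Dict, List, Optional, Tuple

History = List[Tuple[str, str]]  # (speaker, message)


def run_sequential(agents: List[Callable[[str], str]], task: str) -> str:
    """Sequential pattern: each agent's output becomes the next agent's input."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def run_group_chat(members: Dict[str, Callable[[History], str]],
                   manager: Callable[[History], Optional[str]],
                   task: str, max_turns: int = 6) -> History:
    """Group chat: the manager picks the next speaker (None ends the chat); everyone sees the full history."""
    history: History = [("user", task)]
    for _ in range(max_turns):
        speaker = manager(history)
        if speaker is None:
            break
        history.append((speaker, members[speaker](history)))
    return history


# Sequential: take the order -> annotate allergens -> ask for confirmation (toy agents).
def take_order(text: str) -> str:
    return f"order: {text.strip()}"


def annotate_allergens(text: str) -> str:
    return text + (" | allergens: gluten, dairy" if "margherita" in text.lower() else " | allergens: none known")


def ask_confirmation(text: str) -> str:
    return f"Please confirm -> {text}"


pipeline_result = run_sequential([take_order, annotate_allergens, ask_confirmation], " 1x Margherita ")
print(pipeline_result)


# Group chat: a writer and a reviewer alternate until the reviewer approves.
def writer(history: History) -> str:
    if len(history) == 1:
        return "Welcome to Contoso Pizzeria!"
    return "Welcome to Contoso Pizzeria, near Milan Central Station!"


def reviewer(history: History) -> str:
    return "APPROVED" if "Milan" in history[-1][1] else "Please mention our location."


def manager(history: History) -> Optional[str]:
    last_speaker, last_message = history[-1]
    if last_speaker == "Reviewer" and last_message == "APPROVED":
        return None
    return "Reviewer" if last_speaker == "Writer" else "Writer"


chat = run_group_chat({"Writer": writer, "Reviewer": reviewer}, manager, "Write a greeting for our customers.")
for speaker, message in chat:
    print(f"{speaker}: {message}")
```

The `max_turns` cap is a simple safeguard so a chat that never reaches approval still terminates.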

In our pizzeria scenario, suppose we want to orchestrate three agents so that they work simultaneously in a concurrent pattern:

- The Customers Welcome Agent – an agent with no tools that simply greets customers and provides information about the restaurant.

- The Orders Manager Agent we previously built in Azure AI Foundry, imported into the code via its agent ID.

- The Allergen Checker Agent – an agent that analyzes the order details and provides information about potential allergens in the items.

The Python code to define the three agents and add them to a concurrent orchestration with the Semantic Kernel Agent Framework looks like this:

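```python
import asyncio
import os

from azure.identity.aio import DefaultAzureCredential
from semantic_kernel import Kernel
from semantic_kernel.agents import AzureAIAgent, ChatCompletionAgent, ConcurrentOrchestration
from semantic_kernel.agents.runtime import InProcessRuntime
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion


def create_kernel() -> Kernel:
    # Assumed helper: a kernel backed by an Azure OpenAI chat deployment.
    # AzureChatCompletion() reads its endpoint and deployment from AZURE_OPENAI_* environment variables.
    kernel = Kernel()
    kernel.add_service(AzureChatCompletion())
    return kernel


async def main() -> None:
    agent_id = os.getenv("AZURE_AI_AGENT_ID")
    agent_endpoint = os.getenv("AZURE_AI_AGENT_ENDPOINT")

    async with (
        DefaultAzureCredential() as credential,
        AzureAIAgent.create_client(credential=credential, endpoint=agent_endpoint) as client,
    ):
        # Agent 1: Contoso Pizzeria Customers Welcome Agent (no tools)
        customers_welcome_agent = ChatCompletionAgent(
            name="CustomersWelcomeAgent",
            description="Greets customers and provides information about the restaurant.",
            instructions="Welcome the customer and provide a brief overview of the services offered by Contoso Pizzeria, located in Milan near the Central Station. Be friendly and engaging.",
            kernel=create_kernel(),
        )

        # Agent 2: Contoso Pizzeria Orders Manager Agent, built earlier in Azure AI Foundry
        agent_definition = await client.agents.get_agent(agent_id=agent_id)
        orders_manager_agent = AzureAIAgent(client=client, definition=agent_definition, name="OrdersManagerAgent")

        # Agent 3: Contoso Pizzeria Allergen Checker Agent
        allergen_checker_agent = ChatCompletionAgent(
            name="AllergenCheckerAgent",
            description="Checks order requests and identifies potential allergens.",
            instructions="Analyze the order details and provide information about potential allergens present in the items.",
            kernel=create_kernel(),
        )

        # Orchestrate agents concurrently
        orchestrator = ConcurrentOrchestration(
            members=[customers_welcome_agent, orders_manager_agent, allergen_checker_agent]
        )

        # Run on an in-process runtime; the order text below is an illustrative user input
        runtime = InProcessRuntime()
        runtime.start()
        result = await orchestrator.invoke(task="I'd like one Margherita and one Diavola.", runtime=runtime)
        for message in await result.get(timeout=60):  # waits until all agents have answered
            print(f"{message.name}: {message.content}")
        await runtime.stop_when_idle()


if __name__ == "__main__":
    asyncio.run(main())
```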

Given an input from the user (the order details), the three agents run in parallel, and you get their outputs once all of them have completed their tasks. The complete code sample is available at https://aka.ms/multi-agents-sample.
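The fan-out/fan-in behavior of the concurrent pattern can be seen in isolation with the standard library. This is a conceptual sketch, not how Semantic Kernel implements `ConcurrentOrchestration`: three hypothetical async agents receive the same input, `asyncio.sleep` simulates model latency, and `asyncio.gather` waits for all of them and returns their results in the order the agents were listed.

```python
import asyncio
from typing import Dict


# Hypothetical async agents; asyncio.sleep stands in for model latency.
async def welcome_agent(order: str) -> str:
    await asyncio.sleep(0.03)
    return "Welcome to Contoso Pizzeria!"


async def orders_agent(order: str) -> str:
    await asyncio.sleep(0.01)
    return f"Order created: {order}"


async def allergen_agent(order: str) -> str:
    await asyncio.sleep(0.02)
    return "Contains gluten and dairy" if "margherita" in order.lower() else "No known allergens"


async def run_concurrently(order: str) -> Dict[str, str]:
    agents = {
        "CustomersWelcomeAgent": welcome_agent,
        "OrdersManagerAgent": orders_agent,
        "AllergenCheckerAgent": allergen_agent,
    }
    # Fan out: start every agent on the same input. Fan in: wait until all have finished.
    results = await asyncio.gather(*(agent(order) for agent in agents.values()))
    return dict(zip(agents, results))


outputs = asyncio.run(run_concurrently("1x Margherita"))
for name, text in outputs.items():
    print(f"{name}: {text}")
```

Because the agents run concurrently, the total wait is roughly that of the slowest agent rather than the sum of all three.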

What’s Next: Open Protocols and Interoperability

As the multi-agent landscape continues to evolve, open protocols are becoming essential for building flexible, interoperable agent systems. Two stand out: the Model Context Protocol (MCP), a standard that enables AI agents to discover, reason over, and invoke tools, and Agent2Agent (A2A), a protocol for dynamic, context-aware communication between agents. These standards let developers integrate agents across different platforms, fostering collaboration and task delegation without proprietary lock-in. Microsoft is committed to embracing these open protocols so that developers have the flexibility to build modular, cross-platform agent ecosystems.
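To give a feel for what MCP tool discovery and invocation look like on the wire, here is a small sketch of the JSON-RPC 2.0 messages MCP is built on: a `tools/list` request, a `tools/call` request, and parsing of a tool result. The tool name `get_order_status`, its arguments, and the sample response are hypothetical. A real session also starts with an `initialize` handshake and runs over a transport such as stdio or HTTP, both omitted here; in practice an MCP client SDK builds these messages for you.

```python
import itertools
import json
from typing import Optional

_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def text_from_tool_result(response: str) -> str:
    """Join the text items in the content of a tools/call result."""
    result = json.loads(response)["result"]
    return "\n".join(item["text"] for item in result["content"] if item["type"] == "text")


# Discover the server's tools, then call one of them (hypothetical tool and arguments).
list_request = jsonrpc_request("tools/list")
call_request = jsonrpc_request(
    "tools/call",
    {"name": "get_order_status", "arguments": {"order_id": "A123"}},
)

# Hand-written example of a server reply, for illustration only.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 2,
    "result": {"content": [{"type": "text", "text": "Order A123 is in the oven."}], "isError": False},
})

print(list_request)
print(call_request)
print(text_from_tool_result(sample_response))
```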

Azure AI Foundry Agent Service supports MCP, allowing developers to plug in any MCP-compliant server with zero custom code. It also exposes the A2A API head, enabling open-source orchestrators to connect with Foundry Agent Service agents without requiring custom integrations. Additionally, Semantic Kernel supports both MCP and A2A, enabling developers to define and consume OpenAPI-based tools within their agent workflows via MCP, and to compose dynamic, multi-agent workflows using A2A.

Prepare yourself to master Agentic AI

As you embark on your journey into the world of agentic AI, remember that the tools and frameworks are evolving rapidly, and so are the opportunities. Whether you’re building your first single-agent prototype or orchestrating complex multi-agent workflows, embracing open protocols like MCP and A2A will help keep your solutions future-ready, interoperable, and scalable.

To continue your learning, explore our curated resources:

- Learn how to navigate the wide range of AI models, your agents’ starting point, by joining experts every week at Model Mondays.

- Grasp agent fundamentals and start building AI agents with the AI Agents for Beginners course.

- Dive into the MCP for Beginners course and tune in to the MCP Bootcamp series to understand the protocol powering tool interoperability.

- Join the Azure AI Foundry dev communities to connect with peers, share insights, and stay ahead of the curve.

- Watch the accompanying course on demand for free: https://learning.datacouch.io/course/enhance-ai-experiences-with-multimodal-multi-agentic-systems

FAQs

- What is a multi-agentic AI system?

A multi-agentic AI system uses multiple specialized agents that collaborate to complete complex tasks more efficiently, with better performance and scalability.

- How do Azure tools help in building AI agents?

Azure offers IaaS, PaaS, and SaaS options, from full control using the Microsoft Agent Framework to no-code tools like Copilot Studio, for building flexible AI agents.

- What are the benefits of multi-agent systems over single agents?

Multi-agent systems improve task specialization, scalability, and fault tolerance, and are easier to maintain and evolve over time.

- Can I integrate external tools with my Azure AI agents?

Yes, Azure agents can connect with OpenAPI, Microsoft Fabric, Logic Apps, and even third-party services using built-in connectors.

- What protocols ensure agent interoperability?

Open standards like MCP and A2A enable agents to discover tools and communicate across systems, ensuring flexibility and avoiding vendor lock-in.